Artificial Intelligence – Playful Decision Makers

by Mr Creosote (02 Jul 2011)

Who is the Smartest?

Artificially Intelligent or Naturally Stupid?

Defining the term ‘intelligence’ (even with respect to human beings) is not a trivial task. Ultimately, the only thing the experts in the field seem to agree on is that it cannot be objectively decided what counts as ‘intelligent’, because there is no universally accepted definition. Standardised tests like the IQ scale are supposed to quantify the unknown, to make it measurable and comparable, but it is not even clear whether intelligence can be measured on a one-dimensional scale at all. This article is not supposed to settle all the open questions of psychology and brain research (which this author would not be capable of anyway). It is concerned with artificial intelligence (AI), which can be intuitively defined as the attempt to reproduce (or emulate) human behaviour as closely as possible.

There are two ways to achieve this: We can either analyse the process itself which is used to come up with decisions defined as intelligent (meaning we look at the origin of intelligence itself) or we evaluate the results of intelligent decision-making (meaning we look at the ‘output’ of the reference intelligence). The first method is the more exact one, but it is also much more complex. It would be a complete solution, because it goes directly to the root of the issue. If we knew how human intelligence works, these processes could be reproduced exactly, resulting in the perfect artificial intelligence model whose outputs could not be distinguished from those of actual humans. However, neither neuroscientists nor sociologists are able to provide the necessary answers and therefore, this approach is a dead end for now.

The only option which remains is the observation of behaviour which we consider intelligent and the attempt to produce similar results by arbitrary means. These means do not need to match the original ones, but the results have to be produced within an autonomous decision process which is not externally controlled. The last aspect is important, because artificial intelligence is usually implemented on deterministic machines these days. Human brain activity, on the other hand, is probably better described as a stochastic process which can lead to different results on repetition. So the core issue is how to come up with a model which appears intelligent to human observers in spite of the basic differences in the way it gets there.

The Turing Test: Not Distinguishable from a Human Being?

The Litmus Test

In 1950, the mathematician Alan Turing rewrote this question in the form of a test: A human communicates with an unknown partner through a computer (in order to rule out visual and aural clues). She is not told whether it is another human or an artificial intelligence. If, after talking for some time, she cannot tell whether it was a human or a machine, although it actually was the latter, this artificial intelligence would have passed the Turing Test.

Turing himself postulated that in the year 2000, computers would be able to pass the test. As we all know, he was wrong: Even a good ten years later, no artificial intelligence – no matter how intricate – even comes close.

ELIZA

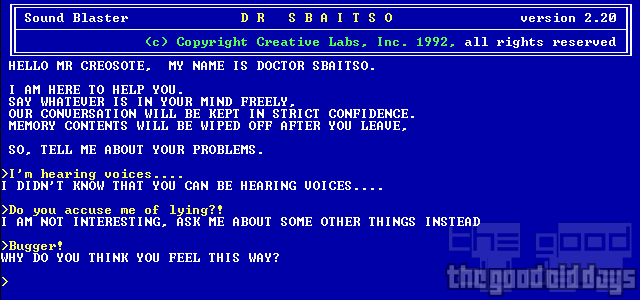

ELIZA was an application developed in 1966 whose goals were directly related to the Turing Test: The application reacts to natural language input from the user by providing answers or questions on its own. It is supposed to simulate a conversation.

The most popular version of ELIZA is the ‘psychotherapist’: This virtual partner in the conversation had a relatively easy job, because it simply scanned the user input for keywords and sentence patterns to extract the core of the sentence and put that into a question of its own. “I believe I’m being followed.” could have been answered with “Why do you believe you’re being followed?”.

On the one hand, according to the cliché, this is how many psychiatrists actually work – allegedly to get the patient towards self-reflection. On the other hand, this is not very convincing for a human being after a short time, because the ‘artificial intelligence’ visibly reacts only to keywords – sometimes putting them into a completely wrong context. So ELIZA was still far from passing the Turing Test. Nevertheless, it is significant as an early ‘playful’ experiment to which several modern application and game genres can be traced back.
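The keyword-driven rephrasing described above can be illustrated with a minimal sketch. This is not ELIZA's original script: the rule list, the pronoun table and the function names are assumptions made up for this example, and a real script would contain far more rules.

```python
import re

# Hypothetical pronoun swaps used to turn the user's statement around.
REFLECTIONS = {"i": "you", "i'm": "you're", "my": "your", "me": "you", "am": "are"}

# Hypothetical rules: a pattern to look for and a template for the reply.
RULES = [
    (re.compile(r"i believe (.*)", re.IGNORECASE), "Why do you believe {}?"),
    (re.compile(r"i feel (.*)", re.IGNORECASE), "How long have you felt {}?"),
]


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(sentence):
    """Return a question built from the first rule whose pattern matches."""
    cleaned = sentence.strip().rstrip(".!?").replace("’", "'")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # No keyword found: fall back to a generic prompt.
    return "Please tell me more."


print(respond("I believe I'm being followed."))
# prints: Why do you believe you're being followed?
```

Because the program only reacts to surface patterns, any input which happens to contain a keyword in an unrelated context is ‘turned around’ just the same, which is exactly the weakness a human partner notices quickly.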

Digital Winners: Superior to all Humankind?

The Turing Test’s goals are very ambitious and also in some ways questionable. The ability to communicate in a human way is not identical with intelligent behaviour. The test only covers the intersection between communication and intelligence and it rejects any non-verbally-communicative behaviour even if it could be otherwise described as intelligent. So even if the Turing Test still remains unsolved, it is still imaginable to have more specialised tasks calculated in an ‘intelligent’ way.

Only a short time ago, a computer called Watson got into the mainstream press when it broke all records in the game show Jeopardy. Very impressive, but does this already fall into the area of artificial intelligence? Jeopardy mainly requires extensive factual knowledge. This is where any modern information system can shine thanks to seemingly endless databases which can be searched within seconds – no matter how educated the human opponents are.

The intelligence of the Watson system does not lie in the knowledge itself, but in the algorithms responsible for understanding the questions. In Jeopardy, each term (called the ‘answer’ in the rules) is ‘defined’ without naming it directly. The candidates have to guess it (using a ‘question’). So the given definition needs to be interpreted and understood correctly in order to give the correct answer.

Watson had to extract the relevant key words from the natural language before it could match them with its database. This is where human players are at an advantage: Natural language does not follow such strict rules as required for a fail-safe analysis by a deterministic machine, but humans can grasp the meaning of a sentence relatively quickly and reliably.

This led to some misinterpretations which might have seemed bizarre to the viewers, i.e. the artificial intelligence made mistakes which would never occur with a human. These answers which completely missed the point of the question remained few, though. So in the end, the computer won against its human opponents by a large margin. The artificial intelligence had proven to be more than adequate to utilise its superior knowledge of facts.

Chess computers are a similar case, although the balance between pure knowledge and intelligent decision algorithms is slightly different. In this clearly defined playing field with programmable rules, no human player can compete against specialised computers anymore.

Still, this requires knowledge of many games and strategies stored in vast databases which the computer can access. Nevertheless, this part of the whole package plays only a smaller role: Chess is different from Jeopardy, because there are no clearly ‘right’ or ‘wrong’ decisions which can be confirmed or refuted immediately. A good chess player needs lots of knowledge, but games are eventually won by clever and maybe also original (i.e. intelligent) planning.

So even more so than with Watson, the important question about Deep Blue and its siblings is how these deterministic ‘calculators’ come to their decisions. Which piece should be moved to where under which circumstances in order to put pressure on the opponent?

Mathematical Basics: The Section You Will Probably Skip

Decision Theory

Decision theory defines a group of methods and algorithms which are concerned with the evaluation of input values according to predefined criteria. Factors relevant to the decision are identified and used in a mathematical formula which produces a result which then will be classified according to the set of possible results.

This can be done in various ways. Some classifiers use rules, others work on the stochastic level directly, i.e. they juggle with numbers. Some classifiers are more intuitive than others – but there is no direct correlation with the quality of the results. The intended purpose of the classification also has to be taken into account for the selection.

Modelling

The function by which an artificial intelligence classifies inputs and makes a decision unfortunately cannot be taken for granted. There are roughly three broad approaches to tackle the preparation of the function definition.

Expert Knowledge

In many fields of application, human experts who are perfectly capable of making good decisions already exist. Their knowledge can be manually transferred into an automated decision model if the decision criteria can be described and abstracted. Usually, this is attempted with decision rules, because they come closest to the way humans verbalise their reasoning.

Models produced this way can be well comprehensible and verifiable. This, however, can be the biggest disadvantage of this approach at the same time: If the presumed experts name non-ideal criteria or if they attribute wrong weights, the decision model will be inherently weak. This weakness is not detectable or provable. This is because there is no mistake in the program code implementing the model: The mapping of the model between the abstract, mathematical/logical level and technical implementation is already exact. The problem lies in the root itself which is not testable, because its creation has been based on subjective evaluation and human verbalisation as opposed to a formal approach.

Also, the approach has got its limits: Decision models quickly get very complex. Transferring this complexity into a model ‘by hand’ is virtually impossible at some point.

Empirical Analysis

If a direct translation of expert knowledge is not possible, maths can still help. Data Mining is the buzzword denoting a set of techniques which usually boil down to statistical analysis of given data.

Large logs of human decisions are the prerequisite. These can be analysed by pattern recognition algorithms in order to find, identify and extract typical behaviour patterns automatically and – as well as possible – abstracted. Sometimes (depending on the specific nature of the algorithm), this is also called Clustering which means the automatic recognition of groups within a data set.

Apart from the requirement of huge amounts of data, the biggest disadvantage of using the Data Mining approach is that we have to be aware that it is not even an attempt to find an ‘ideal’ decision. Instead, the goal is to reproduce the original results as closely as possible. So the assumption is that the humans whose decisions have been analysed acted ‘perfectly’.

On the other hand, this is not necessarily always a disadvantage. It matches the definition of artificial intelligence from the beginning exactly: It is the reproduction of human behaviour including all of its weaknesses. Whether this is acceptable depends on the nature of the application.

Another potential problem is that all rules which can only implicitly be found within the data will not be translated into explicit rules in the automatically created models. For example, this could be certain thresholds. If an artificial intelligence is supposed to control a machine which only takes discrete values on a scale from 1 to 10 as inputs, some AI models might still suggest non-integer values. Such conditions (in the example: only integer values) might need to be added to the model afterwards manually.
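As a minimal sketch of adding such a condition afterwards, the raw suggestion of a model can be mapped onto the permitted scale before it is passed on. The function name and the choice of ‘round half up’ are assumptions for this example; the scale from 1 to 10 is taken from the text above.

```python
import math


def to_valid_input(raw_value, lowest=1, highest=10):
    """Map a model's raw suggestion onto the machine's discrete scale."""
    # Round to the nearest integer (halves are rounded up).
    nearest = math.floor(raw_value + 0.5)
    # Clamp to the range the machine accepts.
    return max(lowest, min(highest, nearest))


for suggestion in (7.3, 2.5, 11.8, 0.2):
    print(suggestion, "->", to_valid_input(suggestion))
```

The model itself stays untouched; the constraint is simply enforced as a separate step on its output.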

Adaptive Systems

Decision models, no matter how they have been created initially, do not need to be static. Decision data from productive runs can be fed back into the system to adapt the model to newly discovered knowledge patterns.

For example, a chess computer could save all the games played against it. If the artificial intelligence lost the last game and if the human opponent now tries to win again the same way, the AI could now react differently, because it might have learned that its previous tactic apparently did not succeed.

The danger associated with a system learning in an unsupervised way is that non-ideal decision patterns could reinforce themselves over time. The system adapts to the type of data which it receives. If, for example, the very first data sets are not representative, they nevertheless imprint themselves especially strongly – which leads to worse results later on. This is due to an avalanche effect: If a system is initialised badly, the derived decision patterns will be applied in practice which leads to these patterns being reinforced, because an unsupervised system will usually use the application of known patterns as a reassurance factor.

Example

The following is supposed to be a simple example of translating ‘expert knowledge’ into a usable model for a chess computer. The model should be static.

One common way to introduce the different pieces to beginners is to attribute a relative value to them.

| Piece | Value |
|---|---|
| Pawn | 1 |
| Knight | 3 |
| Bishop | 3 |
| Rook | 5 |
| Queen | 9 |
| King | ∞ |

This can be used – by both humans and artificial intelligences – as a basis for deciding which of one’s own pieces to move. A complete decision model could look like this:

- Determine all (according to the rules) possible moves of all of one’s own pieces.

- Link a value V(b) to each of these moves which corresponds to the value of the opponent’s piece which would be captured in the process (if no enemy piece is captured: 0).

- Link a value V(w) to each of these moves which corresponds to the value of one’s own piece which would end up as ‘non-covered’ (i.e. on a square which none of one’s own pieces could reach in the next turn) and which could be captured by an enemy piece. If more than one piece is not covered, the highest individual value is used.

- For each move, calculate the difference G = V(b) – V(w).

- Select the move with the maximum G. If more than one candidate move exists with the same value of G, select the one with maximum V(b). If there is still more than one candidate, select the first one from the list.

- Commit the selected move.

This verbal description of the model is programmable and deterministic. Special attention has to be paid to the additional rules within the fifth step: Every possible conflict of having more than one candidate for the decision has to be resolved by the rules – even a random selection is a legitimate last resort. It is a prerequisite of every decision model that no undefined (and therefore undecidable) state ever occurs.
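The steps listed above can be sketched in a few lines of code. The sketch assumes that move generation (the first step) has already happened elsewhere: each candidate move simply arrives with the piece it would capture and the list of one's own pieces it would leave uncovered and attackable. The `Move` class, the function names and the sample moves are hypothetical; only the piece values and the selection rules come from the text.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional

PIECE_VALUES = {"pawn": 1, "knight": 3, "bishop": 3,
                "rook": 5, "queen": 9, "king": math.inf}


@dataclass
class Move:
    label: str
    captured: Optional[str] = None                    # enemy piece taken, if any
    exposed: List[str] = field(default_factory=list)  # own uncovered, attackable pieces


def v_b(move):
    """Value of the captured enemy piece (0 if nothing is captured)."""
    return PIECE_VALUES[move.captured] if move.captured else 0


def v_w(move):
    """Highest value among one's own pieces left uncovered and attackable."""
    return max((PIECE_VALUES[piece] for piece in move.exposed), default=0)


def gain(move):
    """G = V(b) - V(w)."""
    # Assumption of this sketch: capturing the king always wins, which also
    # avoids the undefined result of infinity minus infinity.
    if v_b(move) == math.inf:
        return math.inf
    return v_b(move) - v_w(move)


def select_move(candidates):
    """Maximum G, then maximum V(b), then the first candidate in the list."""
    # max() returns the first of several equally ranked candidates.
    return max(candidates, key=lambda move: (gain(move), v_b(move)))


candidates = [
    Move("Nf3"),                                         # G = 0
    Move("Bxc6", captured="knight", exposed=["bishop"]),  # G = 3 - 3 = 0
    Move("Qxb7", captured="pawn", exposed=["queen"]),     # G = 1 - 9 = -8
    Move("exd5", captured="pawn"),                        # G = 1
]
print(select_move(candidates).label)  # prints: exd5
```

Without the last candidate, the first two moves tie at G = 0 and the tie-break on V(b) selects the capture.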

The optimisation criterion which this model is based on and which defines the ‘game strategy’ which this artificial intelligence would apply is:

V(b) – V(w) = max

The second sentence of the fifth step makes it into a pure elimination strategy: If the possibility to capture an enemy piece with a positive or neutral value balance exists, this will be selected.

The optimisation is purely local from a temporal point of view: Only the current state of the board will be taken into account. Neither the game history nor possible consequences further into the future than the following move will be taken into account. This artificial intelligence does not have a ‘plan’ in the classic sense. For example, it would never sacrifice highly valued pieces for the sake of a later tactical advantage.

The AI would also run into problems achieving checkmate, because a systematic close-in on the king over more than one move is not covered. If the AI gets the possibility to capture the enemy king, it will do so, however, because of the infinite value attributed to this piece. Knowledge about the game’s ultimate goal is therefore covered adequately by the model.

Due to the constraints, this AI would not be very competitive even against a beginner. However, it would be capable of participating in a chess game following the rules and making decisions which can be verified and understood. So the model does belong to the class of artificial intelligences, because it does make autonomous decisions – even if the AI will not be considered a strong player. Keep in mind that human players needed to start learning the game at some point, too, and in that phase, models like this often play a major role in the decisions, too.

Of course, this model could be extended. One direction, for example, would be to also take the quality of the cover of one’s own pieces into account. The assumption of the basic model is that the opponent will never capture pieces which are covered. However, in actual games, it is common practice to have longer runs of exchanging pieces back-to-back.

To get this into the model, the value of the piece V(w) which is covered could be correlated with the value V(w2) of the piece which is covering. For example, the difference V(w2) – V(w) could be used: This time, the difference would have to be minimised instead of maximised, i.e. covering pieces with a high value using pieces with low value would be preferred.

However, there would be more factors: The number of one’s own pieces which are covering one particular piece, the number of enemy pieces which are threatening one’s pieces, their values etc. The beautifully one-dimensional model described above would quickly extend into n dimensions. This goes too far in the scope of this article, but the way to define this extended model would still be the same one.

For Those Who Really Want to Learn More

As mentioned before, there are many different methods and sub-topics in this field of maths. Each of them has been the subject of whole books. Each of them can also be interesting to learn about in detail, but this article will not cover these details. Instead, here is a list of cues to get you started:

Maximum-A-Posteriori (MAP) – Classification

Maximum-Likelihood (ML) – Classification

Bayes Classification

Decision Trees

Neural Networks

Nearest Neighbour Classification (‘lazy’)

Regression Analysis

Fuzzy Logic

Successful (?) Artificial Intelligence

In this section, some examples from the world of computer games will show where artificial intelligence implementations work well or where they fail. Also, there will be some examples of games which do not use artificial intelligence at all, but depend on non-intelligent global rules instead. So the first question is: Where do we find artificial intelligence at all and which games simply use repeating, non-intelligent standard algorithms?

Board Games: Highs and Lows

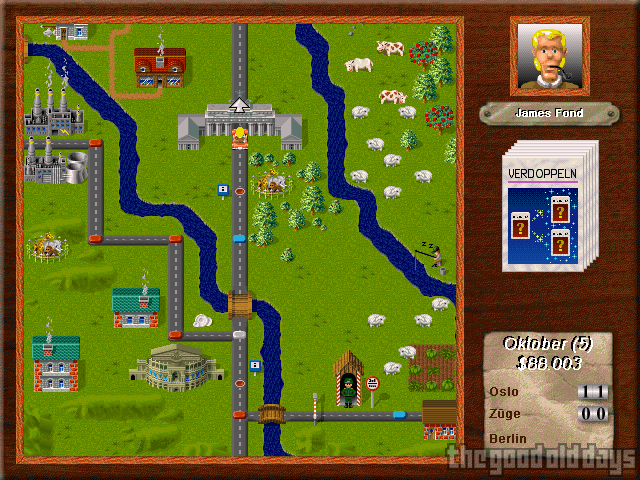

The chess example showed that games with a clearly defined and limited scope of possible actions and a small ruleset can be handled pretty well by artificial intelligences. An artificial intelligence which can be programmed in a fairly trivial way, but which is nevertheless very effective in game terms can be found in Dr. Drago's Madcap Chase. In this game, it is each player’s goal to reach randomly selected capitals on a map of Europe before the opponents do – only via pre-defined roads.

The optimisation criterion is clear: Find the shortest route between the current location and the target capital. This mathematical problem can be solved in a deterministic way with Dijkstra’s Algorithm. Another dimension is added by the intermediate squares the player can land on: Some have positive, some have negative effects. Their value could, for example, be integrated into the model by adding a summand of ±1.
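A minimal sketch of this idea follows. It is not taken from the game: the tiny map, the square names `a`, `b`, `c` and the effect values are made up for illustration. Every square costs one step to enter, and the ±1 summand is added on top of that; the cost is never allowed to drop below zero, because Dijkstra's algorithm requires non-negative weights.

```python
import heapq

# Hypothetical map: each square lists the squares reachable from it.
GRAPH = {
    "Paris": ["a", "b"],
    "a": ["Paris", "Berlin"],
    "b": ["Paris", "c"],
    "c": ["b", "Berlin"],
    "Berlin": ["a", "c"],
}

# Hypothetical square effects: +1 for a harmful square, -1 for a helpful one.
EFFECTS = {"a": +1, "b": -1, "c": -1}


def cheapest_route(graph, effects, start, target):
    """Dijkstra's algorithm; returns (cost, path) or None if unreachable."""
    best = {start: 0}
    queue = [(0, start, [start])]
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # outdated queue entry, a cheaper way was found already
        for neighbour in graph.get(node, []):
            # One step per square plus its effect, never below zero.
            step = max(0, 1 + effects.get(neighbour, 0))
            new_cost = cost + step
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                heapq.heappush(queue, (new_cost, neighbour, path + [neighbour]))
    return None


print(cheapest_route(GRAPH, {}, "Paris", "Berlin"))       # shortest by distance
print(cheapest_route(GRAPH, EFFECTS, "Paris", "Berlin"))  # square effects included
```

On this sample map, the direct route via `a` wins on pure distance, while the longer detour via `b` and `c` becomes cheaper once the square effects are taken into account.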

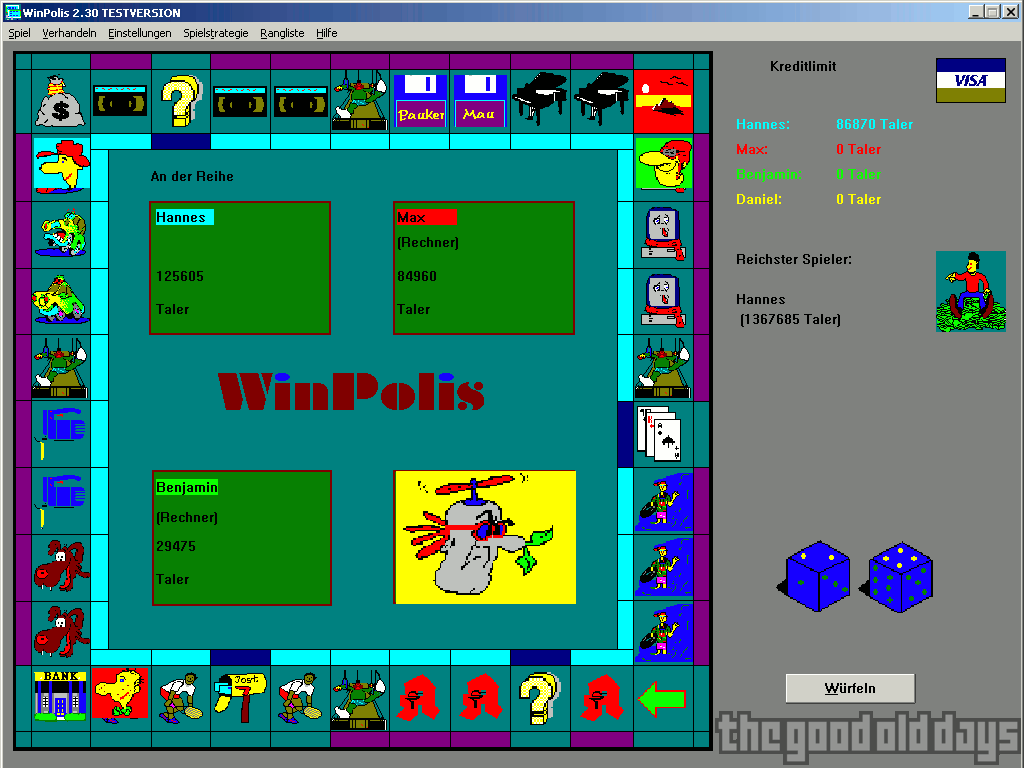

However, even seemingly simple optimisations do not always succeed. Winpolis is a computer version of Monopoly. The computer-controlled players seem to be quite competitive at first. However, it quickly becomes clear that their decisions are made on purely local (short-sighted) terms: Offers to buy their streets for cash are accepted if the amount of money offered exceeds a multiple of the original price (i.e. the perceived value).

This makes sense based on local optimisation: Street X is worth £5000 and the owner receives an offer of £20000 – with a profit of £15000, this is a good deal. However, after the sale, the new owner will now generate income with this street; even the former owner will now have to pay rent when he lands on the square. £15000 could be the typical amount someone has to pay for landing there once after the street has been fully developed. So with some bad luck, the profit might be lost again after only one round. So Winpolis uses an artificial intelligence, but not a very strong (effective) one.
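The difference between the two views can be sketched as follows. The first function mirrors the behaviour observed in the game; the factor of 3 is an assumption, since the actual multiple is not known. The second function is purely illustrative and not part of Winpolis: it weighs the profit against the rent the seller expects to pay later, with the number of expected visits as a made-up parameter.

```python
def accepts_offer_locally(offer, price, multiple=3):
    """Short-sighted rule: accept if the offer exceeds a multiple of the price."""
    return offer > multiple * price


def accepts_offer_with_lookahead(offer, price, rent_when_developed, expected_visits):
    """Illustrative alternative: profit must outweigh the rent expected later."""
    profit = offer - price
    return profit > rent_when_developed * expected_visits


# Figures from the example above: price 5000, offer 20000, rent 15000.
print(accepts_offer_locally(20000, 5000))                   # prints: True
print(accepts_offer_with_lookahead(20000, 5000, 15000, 2))  # prints: False
```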

Simulated Humans: Partial Success

Little Computer People is widely considered a classic in the field of artificial intelligence application outside the scientific community. In this ‘game’, we observe a virtual human in his home: He gets hungry, so he opens the fridge and prepares something to eat. His dog needs to go outside, so he takes it for a walk. So this is an attempt to simulate actual human behaviour. The role of the actual human in front of the screen is minimal: Interaction is limited to providing suggestions to the virtual human via text input. Depending on the current priorities of the virtual human, these suggestions/commands will be carried out or not.

Even though the advertisements of the time promised differently, Little Computer People obviously cannot withstand a scientific analysis. The autonomy which can be observed in the virtual human being turns out to be severely limited, the freedom of action even more so. It is backed by an artificial intelligence – this becomes clear as soon as a command from the human player is rejected. The AI only covers a small spectrum, though, and cannot be considered sufficient in Turing’s sense. However, this claim to scientific standards most likely only existed in the funny ads anyway.

Virtually the same concept was taken up again 15 years later by The Sims. Apart from gameplay-related extensions (like the opportunity to build and arrange the house), the basic idea is exactly the same as in Little Computer People: Interaction between the player in front of the screen and a virtual character in the game. What is especially interesting about The Sims in the scope of this article is that it makes most of the decision criteria used internally transparent: Attributes of the characters like ‘hunger’ or ‘fun’ are displayed at the bottom of the screen. Different activities change the values of one or more of these attributes (and this is also documented within the game): Eating decreases hunger, but it increases the ‘bladder’ value. Using the outdoor swimming pool is fun, but it decreases ‘energy’. The decisions what the character will do if the player leaves him alone – and also the question whether commands by the player will be followed or not – are based on these values. If a need becomes too urgent, it will override anything else in the artificial intelligence model and the character will decide on its own how to resolve the pressing situation.
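A decision model of this kind can be sketched roughly as follows. This is not the game's actual implementation: all numbers, the threshold and the names are assumptions. To keep the sketch simple, every attribute is expressed as an urgency between 0 and 100 (so ‘fun’ and ‘energy’ appear as ‘boredom’ and ‘tiredness’).

```python
URGENT = 80  # hypothetical threshold above which a need overrides everything

# Hypothetical effects of each activity on the urgency values.
ACTIVITIES = {
    "eat": {"hunger": -60, "bladder": +20},
    "use_toilet": {"bladder": -100},
    "swim": {"boredom": -40, "tiredness": +25},
    "sleep": {"tiredness": -80},
}


def best_remedy(need):
    """The activity which lowers the given need the most."""
    return min(ACTIVITIES, key=lambda activity: ACTIVITIES[activity].get(need, 0))


def choose_activity(needs, player_command=None):
    """Urgent needs come first, then the player's command, then free choice."""
    most_urgent = max(needs, key=needs.get)
    if needs[most_urgent] >= URGENT:
        return best_remedy(most_urgent)   # the command is rejected
    if player_command is not None:
        return player_command
    return best_remedy(most_urgent)


def apply_activity(needs, activity):
    """Return the new urgency values, kept within 0..100."""
    effects = ACTIVITIES[activity]
    return {need: min(100, max(0, value + effects.get(need, 0)))
            for need, value in needs.items()}


needs = {"hunger": 30, "bladder": 90, "boredom": 50, "tiredness": 20}
print(choose_activity(needs, player_command="swim"))  # prints: use_toilet
```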

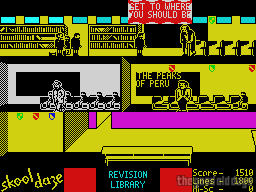

Skool Daze is less ambitious in this regard, but nevertheless provides mechanics sufficient for its cause. About twenty simulated people are moving through the halls of a virtual school building. They seem to be following their daily schedules in an intelligent way: Lessons, breaks and everything else which belongs to a school day can be observed. The player takes over the role of one pupil who tries to nick his own file from the school safe – for that purpose, the teachers have to be tricked into revealing the combination. It is essential, however, to keep one’s head down and appear to follow the regular routine of the school.

Although there are so many characters in the game which all seem to ‘act’, there is reasonable doubt that it actually uses an artificial intelligence at all. None of the visible actions require autonomous decisions by the characters; most likely, everything is controlled by a global, time-based event system, i.e. a ‘god function’ which controls all characters at once. The first lesson starts? Move teacher A to class room 1, teacher B to class room 2 and so on.

This is not an artificial intelligence, because these behaviour patterns are pre-programmed; they are not decided ‘live’ based on the current situation. Some more simple reactions are added to feed the life-like appearance: If a pupil stands up in the middle of a class, the teacher will react: ‘Sit down!’. This shows that artificial intelligence is not always necessary to simulate a ‘living’ world. A well-done static simulation, as found in Skool Daze, can be better than a failed attempt at artificial intelligence.
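How such a ‘god function’ might look is sketched below. This is speculation in code form, matching the guess made above rather than the game's real internals; the timetable entries and names are hypothetical.

```python
# Hypothetical global timetable: for each period, where every character goes.
TIMETABLE = {
    "lesson 1": {"teacher A": "classroom 1", "teacher B": "classroom 2"},
    "break": {"teacher A": "staff room", "teacher B": "staff room"},
}


def start_period(period, positions):
    """The 'god function': move all characters at once, no decisions involved."""
    positions.update(TIMETABLE[period])
    return positions


def teacher_reaction(pupil_is_standing, lesson_in_progress):
    """A fixed reaction to a fixed trigger, added for life-like appearance."""
    if pupil_is_standing and lesson_in_progress:
        return "Sit down!"
    return None


positions = start_period("lesson 1", {})
print(positions["teacher A"])        # prints: classroom 1
print(teacher_reaction(True, True))  # prints: Sit down!
```

None of the characters evaluates its own situation here; everything follows from the clock and a handful of triggers.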

Reaction Time: A Tactical Advantage

Apart from the endless amounts of data, which help in quiz games like Jeopardy, computers have got another major advantage over humans: Once they have their decision models, they can apply them within a split-second. A human always has to assess the situation first – a computer can do this instantly. This is an advantage in all games in which reaction time is a major success factor.

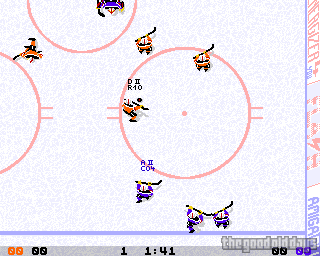

A typical genre where this is the case is sports games. In simulated team sports games like football or ice hockey, all the evasion techniques of the player who controls the ball have to be followed and if the opportunity comes, quick action has to be taken to steal the ball. Shots may be approaching the goal at insane speed – if one has to stop and think what to do and which key to press first, it is most likely too late due to the non-ideal hand-input-device interface. None of this is a problem for an artificial intelligence.

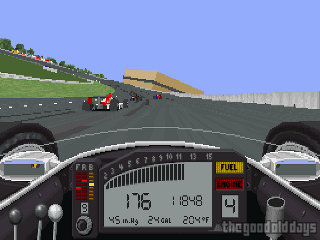

A curve is approaching fast in a racing game? A human player can only depend on the feeling in her guts or her experience. An artificial intelligence, on the other hand, knows the mathematical description of the curve (the way it bends). This knowledge can be used to apply all possible methods of optimising the exact route on the track – and Control Theory can calculate perfect reactions to unforeseen events. It is not just that the computer knows how to calculate all of this – it can also do so in time, i.e. before reaching the curve. The examples show: When it comes to (calculable) timely reactions, artificial intelligence is at an advantage.

Fast Strategists: This is Supposed to be Intelligent?

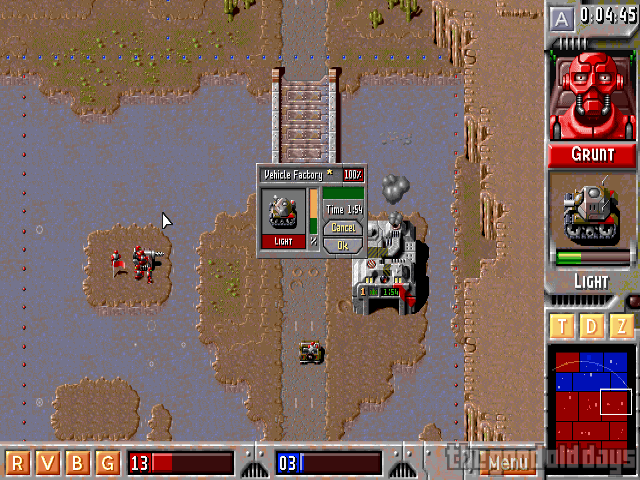

This effect also affects less athletic types of competition. So-called real time strategy, i.e. war games in which the players cannot calmly think turn-by-turn, started out relatively promising with respect to artificial intelligence: Early genre entries like Modem Wars or Command H.Q. offered quite a challenge to the human player.

The one game which defined the genre more than any other, however, went into a different direction: In Dune 2, there is no serious AI to speak of. Instead, the player is threatened by the computer-controlled opponents, because they do not start off with nothing: While the player has to build up production means and armies, the computer already owns these. In fixed time intervals, the game also just ‘gives’ the computer new soldiers. However, they are used in the most primitive way: They always appear in exactly the same spots and move on the same pre-defined tracks. Once the player understands this scheme, it is no danger anymore, because it is trivial to ‘trick’ the computer to always walk right into ‘traps’ – it never changes its tactic after failure.

Why is this still a threat to the player? On the one hand, there are the rule-based advantages which have already been mentioned. On the other hand, it is the same effect as in the sports genre: While the human player first needs to be made aware by optical or aural means that a decision is required at all and then needs to carry out an action via a relatively clumsy mouse interface, the computer can command a hundred tanks at the same time. So an artificial intelligence is used for control of the military units on the lowest level (‘Turn your gun right and fire at the enemy!’) even in Dune 2 and its clones – but it is not responsible for any actually strategic decisions.

There are exceptions to this rule. Z is a humorously cynical war game in which a map made of ‘sectors’ needs to be conquered. All of the actions of the computer-controlled armies are visible and visibly intelligent: An artificial intelligence is at work here which seriously tries to play against the human opponent using the same means as him. Quite impressive compared to the usual contemporary standard in the genre, but of course, this AI also has its strict limits. These show once the hectic first phase of a level is over: Once the player has managed to capture half of the sectors and the game has entered a relatively stable tactical situation, the AI does not manage to come up with any ideas or plans which could maintain the threat level. Also, even this game uses one of the typical cheats of the genre: The computer starts with a stronger army in order to increase the difficulty level for the human player.

Unfortunately, Z remained one of the very few attempts to produce such a game with an artificial intelligence covering the complete gameplay; Dune 2 remained the blueprint from which almost all new games were designed. Games like Mech Commander, for example, relied solely on ‘scripted’ events in order to make the levels thrilling and hard: As soon as the player’s robots reach a certain spot on the map, new opponents will suddenly appear in an unexpected location so that the former plans of the player might be thwarted. This has nothing to do with artificial intelligence at all and from the player’s point of view, it is also hardly related to strategy anymore – success is achieved by memorising the events.

Strategic Failures

There are (too) many examples of artificial intelligences present in commercial products which fail at their task completely. A very interesting one is Diplomacy, Avalon Hill’s conversion of their own famous board game. Diplomacy is all about what the title suggests. However, as far as the computer controlled countries are concerned, there is no diplomacy in this computer version.

The evident explanation is that the programmers were not able to implement a sensible artificial intelligence functioning in diplomatic negotiations with humans. This assumption is fed by the other behaviour of the computer-controlled opponents: Although the number of possible actions in Diplomacy is quite low (which is considered one of the greatest strengths of the game), and all of them could easily be implemented into an AI, there are bizarre, obviously ludicrous decisions – sometimes, the computer will even ‘forget’ to move its pieces at all. Has a random decision generator taken the place of the AI?

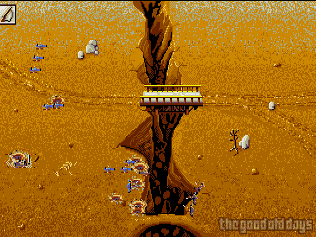

Not quite as bad, but still quite hard to understand: the control routines in North & South. Most of the elements of artificial intelligence are adequate, even if they are not particularly strong. However, in battles involving a river or a chasm, it fails miserably: The computer uses a search algorithm to bring its soldiers towards those of the human player. The shortest distance between two points is a straight line between the two. No, the AI is not quite as bad as just following this principle, but almost. If the player places his own soldiers close to one side of the chasm, the computer will not take the bridge (although it is perfectly intact) to cross, but it will send its small men right towards their enemies – i.e. right into the chasm, resulting in immediate death. Sure, the game is anything but a serious simulation of war, but this most certainly was not intended.

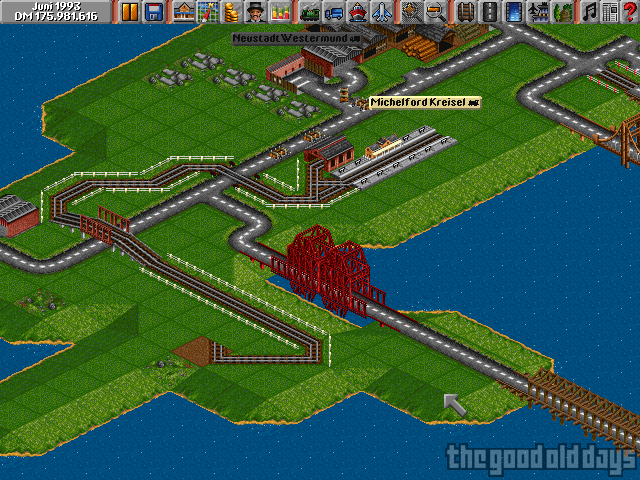

Pathfinding algorithms can get even worse, though: In the civil sector, Transport Tycoon provides some of the biggest laughs. In this game, human and computer players attempt to build transport connections via air, land and water. With aircraft and ships, it is not as noticeable, but what is happening on land lines is completely incomprehensible.

What could possibly be the reason to coil railroad tracks in such ways that they get back to the starting point repeatedly? How can it be that one tiny piece of track placed strategically by a human stops all building progress of the computer, which is not able to plan a route ‘around’ it?

Where Do We Stand, or: For Which Games Should You Still Invite Friends Over?

The question how well artificial intelligence works still depends very much on the application – in spite of all the progress of the last decades. AI already looks very good in fields which can be mastered mainly by access to huge amounts of data. Similarly, applications which depend on fast reaction times usually work well: Automated decision-making by a computer is always faster than that of a human being. So even if the decisions might not be absolutely perfect, the advantages more than make up for that.

It gets more complicated once the ‘rules’ to be covered are less clear. More freedom concerning the available options and more freedom concerning the input variables to consider make it much harder to develop decision models at all and even then, the results might be far from good. This is where the scientific community still has a lot of work to do.

Until then, commercial computer games rely on non-intelligent ways to raise the challenge: Computer-controlled players have rule-based advantages, start out with additional resources, they get ‘gifts’ and so on. Such tactics may make these games harder for the human player, but they are a temporary solution at best – when this ‘cheating’ becomes noticeable, it turns into a major source of frustration almost immediately.

However, before anyone even considers implementing an artificial intelligence, which then could be perceived as weak by the human players, one has to ask oneself whether there are other ways. Many game genres can work without any involvement of an artificial intelligence – and that is not just the ‘simulation’ of lifeless rocks.

For example, the Jeopardy computer game mentioned at the beginning has no artificial intelligence at all. When it is the computer player’s turn, a random number generator decides whether the given answer should be correct or not – without even touching the complicated field of automatic text parsing and interpretation. For this application scenario, this is sufficient.
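Such a ‘non-intelligent’ computer player takes only a few lines. The following is a sketch of the principle, not the game's code; the success rate of 60 % is an arbitrary assumption.

```python
import random


def computer_answers_correctly(success_rate=0.6, rng=random):
    """Decide by chance alone whether the computer player's answer is correct."""
    # rng.random() yields a number in [0, 1), so higher rates succeed more often.
    return rng.random() < success_rate


rounds = [computer_answers_correctly() for _ in range(10)]
print(rounds.count(True), "of 10 answers were correct")
```

Adjusting the single parameter is enough to offer different difficulty levels, without any text being parsed or understood.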

So, in conclusion, it should not always be the goal to get the ‘best’ out of it. It is much more important and it makes much more sense to give thought to what is appropriate.